2014-04-11

Preventing Heartbleed bugs with safe programming languages

The Heartbleed bug in OpenSSL has resulted in a fair amount of damage across the internet. The bug itself was quite simple and is a textbook case for why programming in unsafe languages like C can be problematic.

As an experiment to see if a safer systems programming language could have prevented the bug, I tried rewriting the problematic function in the ATS programming language. I've written about ATS as a safer C before. This gives a real-world test case for it. I used the latest version of ATS, called ATS2.

ATS compiles to C code. The function interfaces it generates can exactly match existing C functions and be callable from C. I used this feature to replace the dtls1_process_heartbeat and tls1_process_heartbeat functions in OpenSSL with ATS versions. These two functions are the ones that were patched to correct the Heartbleed bug.

The approach I took was to follow something similar to that outlined by John Skaller on the ATS mailing list:

ATS on the other hand is basically C with a better type system. You can write very low level C like code without a lot of the scary dependent typing stuff and then you will have code like C, that will crash if you make mistakes.

If you use the high level typing stuff coding is a lot more work and requires more thinking, but you get much stronger assurances of program correctness, stronger than you can get in Ocaml or even Haskell, and you can even hope for *better* performance than C by elision of run time checks otherwise considered mandatory, due to proof of correctness from the type system. Expect over 50% of your code to be such proofs in critical software and probably 90% of your brain power to go into constructing them rather than just implementing the algorithm. It's a paradigm shift.

I started with wrapping the C code directly and calling that from ATS. From there I rewrote the C code into unsafe ATS. Once that worked I added types to find errors.

I've put the modified OpenSSL code in a GitHub fork. The two branches there, ats and ats_safe, represent the latter two stages in implementing the functions in ATS.

I'll give a quick overview of the different paths I took then go into some detail about how I used ATS to find the errors.

Wrapping C code

I've written about this approach before. ATS allows embedding C directly, so the first step was to embed the dtls1_process_heartbeat C code in an ATS file, call that from an ATS function and expose that ATS function as the real dtls1_process_heartbeat. The code for this is in my first attempt of d1_both.dats.

Unsafe ATS

The second stage was to write the functions using ATS but unsafely. This code is a direct translation of the C code, written in unsafe ATS with no additional typechecking via ATS features. The rewritten d1_both.dats contains this version.

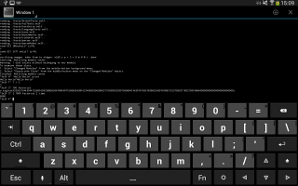

The code is quite ugly but compiles and matches the C version. When installed on a test system it still exhibits the Heartbleed bug. It uses the same pointer arithmetic and hard-coded offsets as the C code. Here's a snippet of one branch of the function:

val buffer = OPENSSL_malloc(1 + 2 + $UN.cast2int(payload) + padding)

val bp = buffer

val () = $UN.ptr0_set<uchar> (bp, TLS1_HB_RESPONSE)

val bp = ptr0_succ<uchar> (bp)

val bp = s2n (payload, bp)

val () = unsafe_memcpy (bp, pl, payload)

val bp = ptr_add (bp, payload)

val () = RAND_pseudo_bytes (bp, padding)

val r = dtls1_write_bytes (s, TLS1_RT_HEARTBEAT, buffer, 3 + $UN.cast2int(payload) + padding)

val () = if r >=0 && ptr_isnot_null (get_msg_callback (s)) then

call_msg_callback (get_msg_callback (s),

1, get_version (s), TLS1_RT_HEARTBEAT,

buffer, $UN.cast2uint (3 + $UN.cast2int(payload) + padding), s,

get_msg_callback_arg (s))

val () = OPENSSL_free (buffer)

It should be pretty easy to follow this by comparing the code to the C version.

Safer ATS

The third stage was adding types to the unsafe ATS version to check that the pointer arithmetic is correct and no bounds errors occur. This version of d1_both.dats fails to compile if certain bounds checks aren't asserted. If the assertloc at line 123, line 178 or line 193 is removed then a constraint error is produced. This error is effectively preventing the heartbleed bug.

Testable Version

The last stage was to implement the fix in the tls1_process_heartbeat function as well and factor out some of the helper routines so they could be shared. This is in the ats_safe branch, which is where the newer changes are happening. This version removes the assertloc usage and fails gracefully instead, so it could be tested on a live site.

I tested this version of OpenSSL, and Heartbleed test programs fail to dump memory from it.

The approach to safety

The tls1_process_heartbeat function obtains a pointer to data provided by the sender and the amount of data sent from one of the OpenSSL internal structures. It expects the data to be in the following format:

byte = hbtype

ushort = payload length

byte[n] = bytes of length 'payload length'

byte[16]= padding

The existing C code makes the mistake of trusting the 'payload length' the sender supplies and passes that to a memcpy. If the actual length of the data is less than the 'payload length' then whatever happens to follow the record in memory gets sent in the response.
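
To make the arithmetic concrete, here is a minimal C sketch (not code from OpenSSL or from the ATS fork) that computes how far an unchecked copy would run past the received record. The names hb_overread_bytes and HB_HEADER_LEN are hypothetical, and the sketch assumes the copy starts right after the 3-byte header, as in the layout above.

```c
#include <stddef.h>

/* Fixed header: 1-byte hbtype plus 2-byte payload length (hypothetical name). */
#define HB_HEADER_LEN 3

/*
 * Returns how many bytes beyond the received record an unchecked
 * memcpy(dst, record + HB_HEADER_LEN, claimed_payload) would read.
 * record_len is the number of bytes actually received.
 */
size_t hb_overread_bytes(size_t record_len, size_t claimed_payload)
{
    /* Bytes that really exist after the header (0 if the record is too short). */
    size_t available = record_len > HB_HEADER_LEN ? record_len - HB_HEADER_LEN : 0;

    return claimed_payload > available ? claimed_payload - available : 0;
}
```

For a 3-byte record that claims a 65535-byte payload, hb_overread_bytes(3, 65535) is 65535: every byte echoed back as "payload" comes from beyond the record.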

In ATS pointers can be manipulated at will but they can't be dereferenced or used unless there is a view in scope that proves what is located at that memory address. By passing around views, and subsets of views, it becomes possible to check that ATS code doesn't access memory it shouldn't. Views become like capabilities. You hand them out when you want code to have the capability to do things with the memory safely and take it back when it's done.
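
C cannot check any of this at compile time, so the closest analogue there is a runtime check. As a rough sketch of the "subset of a view" idea, assume a hypothetical byte_span type that bundles a pointer with the number of bytes its holder may touch, and a hypothetical span_sub helper that narrows it (neither is part of ATS or OpenSSL):

```c
#include <stdbool.h>
#include <stddef.h>

/* A pointer bundled with the number of bytes the holder may touch. */
typedef struct {
    const unsigned char *ptr;
    size_t len;
} byte_span;

/*
 * Narrows `whole` to the `len` bytes starting at `offset`.
 * Returns false and leaves *out untouched if the request does not fit.
 */
bool span_sub(byte_span whole, size_t offset, size_t len, byte_span *out)
{
    /* Written with a subtraction so that offset + len cannot overflow. */
    if (offset > whole.len || len > whole.len - offset)
        return false;

    out->ptr = whole.ptr + offset;
    out->len = len;
    return true;
}
```

The difference from ATS is that nothing stops C code from ignoring the span and using the raw pointer, and the check costs a branch at runtime. In ATS the equivalent check is discharged by the type checker and erased.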

Views

To model what the C code does I created an ATS view that represents the layout of the data in memory:

dataview record_data_v (addr, int) =

| {l:agz} {n:nat | n > 16 + 2 + 1} make_record_data_v (l, n) of (ptr l, size_t n)

| record_data_v_fail (null, 0) of ()

A 'view' is like a Standard ML datatype but exists at type checking time only. It is erased in the final version of the program so it has no runtime overhead. This view has two constructors. The first is for data held at a memory address l of length n. The length is constrained to be greater than 16 + 2 + 1, which is the combined size of the 'padding', 'ushort' and 'byte' mentioned previously. By putting the constraint here we immediately force anyone creating this view to check the length they pass in. The second constructor, record_data_v_fail, is for the case of an invalid record buffer.

The function that creates this view looks like:

implement get_record (s) =

let

val len = get_record_length (s)

val data = get_record_data (s)

in

if len > 16 + 2 + 1 then

(make_record_data_v (data, len) | data, len)

else

(record_data_v_fail () | null_ptr1 (), i2sz 0)

end

Here the len and data are obtained from the SSL structure. The length is checked and the view is created and returned along with the pointer to the data and the length. If the length check is removed there is a compile error due to the constraint we placed earlier on make_record_data_v. Calling code looks like:

val (pf_data | p_data, data_len) = get_record (s)

p_data is a pointer. data_len is an unsigned value and pf_data is our view. In my code the pf_ prefix denotes a proof of some sort (in this case the view) and the p_ prefix denotes a pointer.

Proof functions

In ATS we can't do anything at all with the p_data pointer other than increment, decrement and pass it around. To dereference it we must obtain a view proving what is at that memory address. To get specialized views specific to the data we want, I created some proof functions that convert the record_data_v view to views that provide access to memory. These are the proof functions:

(* These proof functions extract proofs out of the record_data_v

to allow access to the data stored in the record. The constants

for the size of the padding, payload buffer, etc are checked

within the proofs so that functions that manipulate memory

are checked that they remain within the correct bounds and

use the appropriate pointer values

*)

prfun extract_data_proof {l:agz} {n:nat}

(pf: record_data_v (l, n)):

(array_v (byte, l, n),

array_v (byte, l, n) -<lin,prf> record_data_v (l,n))

prfun extract_hbtype_proof {l:agz} {n:nat}

(pf: record_data_v (l, n)):

(byte @ l, byte @ l -<lin,prf> record_data_v (l,n))

prfun extract_payload_length_proof {l:agz} {n:nat}

(pf: record_data_v (l, n)):

(array_v (byte, l+1, 2),

array_v (byte, l+1, 2) -<lin,prf> record_data_v (l,n))

prfun extract_payload_data_proof {l:agz} {n:nat}

(pf: record_data_v (l, n)):

(array_v (byte, l+1+2, n-16-2-1),

array_v (byte, l+1+2, n-16-2-1) -<lin,prf> record_data_v (l,n))

prfun extract_padding_proof {l:agz} {n:nat} {n2:nat | n2 <= n - 16 - 2 - 1}

(pf: record_data_v (l, n), payload_length: size_t n2):

(array_v (byte, l + n2 + 1 + 2, 16),

array_v (byte, l + n2 + 1 + 2, 16) -<lin, prf> record_data_v (l, n))

Proof functions are run at type checking time. They manipulate proofs. Let's break down what the extract_hbtype_proof function does:

prfun extract_hbtype_proof {l:agz} {n:nat}

(pf: record_data_v (l, n)):

(byte @ l, byte @ l -<lin,prf> record_data_v (l,n))

This function takes a single argument, pf, that is a record_data_v instance for an address l and length n. This proof argument is consumed: once the function is called, pf cannot be accessed again (it is a linear proof). The function returns two things. The first is a proof byte @ l which says "there is a byte stored at address l". The second is a linear proof function that takes the first proof we returned, consumes it so it can't be reused, and returns the original proof we passed in as an argument.

This is a fairly common idiom in ATS. It takes a proof, destroys it, returns a new one, and provides a way of destroying the new one to bring back the old one. Here's how the function is used:

prval (pf, pff) = extract_hbtype_proof (pf_data)

val hbtype = $UN.cast2int (!p_data)

prval pf_data = pff (pf)

prval is a declaration of a proof variable. pf is my idiomatic name for a proof and pff is what I use for proof functions that destroy proofs and return the original.

The !p_data is similar to *p_data in C. It dereferences what is held at the pointer. When this happens in ATS it searches for a proof that we can access some memory at p_data. The pf proof we obtained says we have a byte @ p_data so we get a byte out of it.

A more complicated proof function is:

prfun extract_payload_length_proof {l:agz} {n:nat}

(pf: record_data_v (l, n)):

(array_v (byte, l+1, 2),

array_v (byte, l+1, 2) -<lin,prf> record_data_v (l,n))

The array_v view represents a contiguous array of memory. The three arguments it takes are the type of data stored in the array, the address of the beginning, and the number of elements. So this function consumes the record_data_v and produces a proof saying there is a two-element array of bytes at offset 1 from the original memory address held by the record view. Code with access to this proof cannot access the entire memory buffer held by the SSL record. It can only get at the 2 bytes starting at offset 1.
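
For comparison, here is a hedged C sketch of reading that two-byte field. The heartbeat payload length is a 16-bit value in network (big-endian) byte order. The function name hb_read_payload_length is hypothetical, and the length check here happens at runtime, whereas the ATS proof makes it a compile-time obligation.

```c
#include <stddef.h>
#include <stdint.h>

/*
 * Reads the 16-bit big-endian payload length stored at offsets 1 and 2
 * of the record. Returns 0 on success, or -1 if the record is too short
 * to contain the field (in which case *out is not written).
 */
int hb_read_payload_length(const unsigned char *record, size_t record_len,
                           uint16_t *out)
{
    if (record == NULL || record_len < 3)
        return -1;

    /* High byte first, then low byte. */
    *out = (uint16_t)((record[1] << 8) | record[2]);
    return 0;
}
```

Only offsets 1 and 2 are touched, which mirrors the array_v (byte, l+1, 2) view: the helper never needs to know about the rest of the buffer.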

Safe memcpy

One more complicated proof function:

prfun extract_payload_data_proof {l:agz} {n:nat}

(pf: record_data_v (l, n)):

(array_v (byte, l+1+2, n-16-2-1),

array_v (byte, l+1+2, n-16-2-1) -<lin,prf> record_data_v (l,n))

This returns a proof for an array of bytes starting at offset 3 of the record buffer, just past the hbtype byte and the two-byte payload length. Its length is equal to the length of the record buffer less the size of the padding and the first two data items (n - 16 - 2 - 1). It's used during the memcpy call like so:

prval (pf_dst, pff_dst) = extract_payload_data_proof (pf_response)

prval (pf_src, pff_src) = extract_payload_data_proof (pf_data)

val () = safe_memcpy (pf_dst, pf_src |

add_ptr1_bsz (p_buffer, i2sz 3),

add_ptr1_bsz (p_data, i2sz 3),

payload_length)

prval pf_response = pff_dst(pf_dst)

prval pf_data = pff_src(pf_src)

By having a proof that provides access to only the payload data area we can be sure that the memcpy cannot copy memory outside those bounds. Even though the code does manual pointer arithmetic (the add_ptr1_bsz function) this is safe. An attempt to use a pointer outside the range of the proof results in a compile error.
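
The nearest C equivalent has to carry the bounds as ordinary arguments and check them at runtime. The following is a minimal sketch under that assumption. bounded_memcpy is a hypothetical helper, not the safe_memcpy from the ATS code and not an OpenSSL function.

```c
#include <stddef.h>
#include <string.h>

/*
 * Copies n bytes only when n fits in both regions.
 * dst_cap and src_cap are the sizes of the regions the caller is
 * entitled to use, playing the role of the two array_v proofs.
 * Returns 0 on success, or -1 (copying nothing) otherwise.
 */
int bounded_memcpy(unsigned char *dst, size_t dst_cap,
                   const unsigned char *src, size_t src_cap, size_t n)
{
    if (n > dst_cap || n > src_cap)
        return -1;

    memcpy(dst, src, n);
    return 0;
}
```

The original bug corresponds to calling plain memcpy with n taken from the sender and no src_cap to compare it against. In the ATS version the comparison cannot be forgotten, because the call does not typecheck without it.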

The same concept is used when setting the padding to random bytes:

prval (pf, pff) = extract_padding_proof (pf_response, payload_length)

val () = RAND_pseudo_bytes (pf |

add_ptr_bsz (p_buffer, payload_length + 1 + 2),

padding)

prval pf_response = pff(pf)

Runtime checks

The code does runtime checks that constrain the bounds of various length variables:

if payload_length > 0 then

if data_len >= payload_length + padding + 1 + 2 then

...

...

Without those checks a compile error is produced. The original Heartbleed flaw was the absence of similar runtime checks. The code as structured can't suffer from that flaw and still be compiled.
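
Written as a standalone C predicate, the two conditions above look roughly like this. It is a sketch only: hb_lengths_ok and the HB_* constants are hypothetical names, and in C nothing forces a caller to invoke the check before copying.

```c
#include <stdbool.h>
#include <stddef.h>

#define HB_TYPE_LEN    1   /* hbtype byte */
#define HB_LENGTH_LEN  2   /* 16-bit payload length */
#define HB_PADDING_LEN 16  /* padding bytes */

/*
 * True only when the payload is non-empty and a record of data_len bytes
 * is large enough to hold the header, the claimed payload and the padding.
 */
bool hb_lengths_ok(size_t data_len, size_t payload_length)
{
    const size_t fixed = HB_TYPE_LEN + HB_LENGTH_LEN + HB_PADDING_LEN;

    if (payload_length == 0 || data_len < fixed)
        return false;

    /* Same test as data_len >= payload_length + padding + 1 + 2,
       rearranged so the addition cannot overflow. */
    return payload_length <= data_len - fixed;
}
```

A 3-byte record claiming 65535 bytes of payload fails this test, so a handler that bails out when hb_lengths_ok returns false never reaches the copy.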

Testing

This code can be built and tested. First step is to install ATS2:

$ tar xvf ATS2-Postiats-0.0.7.tgz

$ cd ATS2-Postiats-0.0.7

$ ./configure

$ make

$ export PATSHOME=`pwd`

$ export PATH=$PATH:$PATSHOME/bin

Then compile the openssl code with my ATS additions:

$ git clone https://github.com/doublec/openssl

$ cd openssl

$ git checkout -b ats_safe origin/ats_safe

$ ./config

$ make

$ make test

Try changing some of the code, modifying the constraint tests, etc., to get an idea of what it is doing.

For testing in a VM, I installed Ubuntu, set up an nginx instance serving an HTTPS site and did something like:

$ git clone https://github.com/doublec/openssl

$ cd openssl

$ git diff 5219d3dd350cc74498dd49daef5e6ee8c34d9857 >~/safe.patch

$ cd ..

$ apt-get source openssl

$ cd openssl-1.0.1e/

$ patch -p1 <~/safe.patch

...might need to fix merge conflicts here...

$ fakeroot debian/rules build binary

$ cd ..

$ sudo dpkg -i libssl1.0.0_1.0.1e-3ubuntu1.2_amd64.deb \

libssl-dev_1.0.1e-3ubuntu1.2_amd64.deb

$ sudo /etc/init.d/nginx restart

You can then use a Heartbleed tester on the HTTPS server and it should fail to extract any memory.

Conclusion

I think the approach of converting unsafe C code piecemeal worked quite well in this instance. Being able to combine existing C code and ATS makes this much easier. I only concentrated on detecting this particular programming error but it would be possible to use other ATS features to detect memory leaks, abstraction violations and other things. It's possible to get very specific in defining safe interfaces at a cost of complexity in code.

Although I've used ATS for production code this is my first time using ATS2. I may have missed idioms and library functions that would make things easier, so try not to judge the verbosity or difficulty of the code based on this experiment. The ATS community is helpful in picking up the language. My approach was basically to add types and then work through the compiler errors, fixing each one until the code built.

One immediate question becomes "How do you trust your proof?" The record_data_v view and the proof functions that manipulate it define the level of checking that occurs. If they are wrong then the constraints checked by the program will be wrong. It comes down to having a trusted kernel of code (in this case the view and the proof functions), and users of that kernel can then be trusted to be correct. Incorrect use of the kernel fails to compile, which is where the stronger safety comes from. From an auditing perspective it's easier to check the small trusted kernel and then know the compiler will make sure pointer manipulations are correct.

The ATS specific additions are in the following files: